Louis de Vitry

Co-Founder & CTO

Antoine Labatie, PhD

Lead Computer Vision Engineer

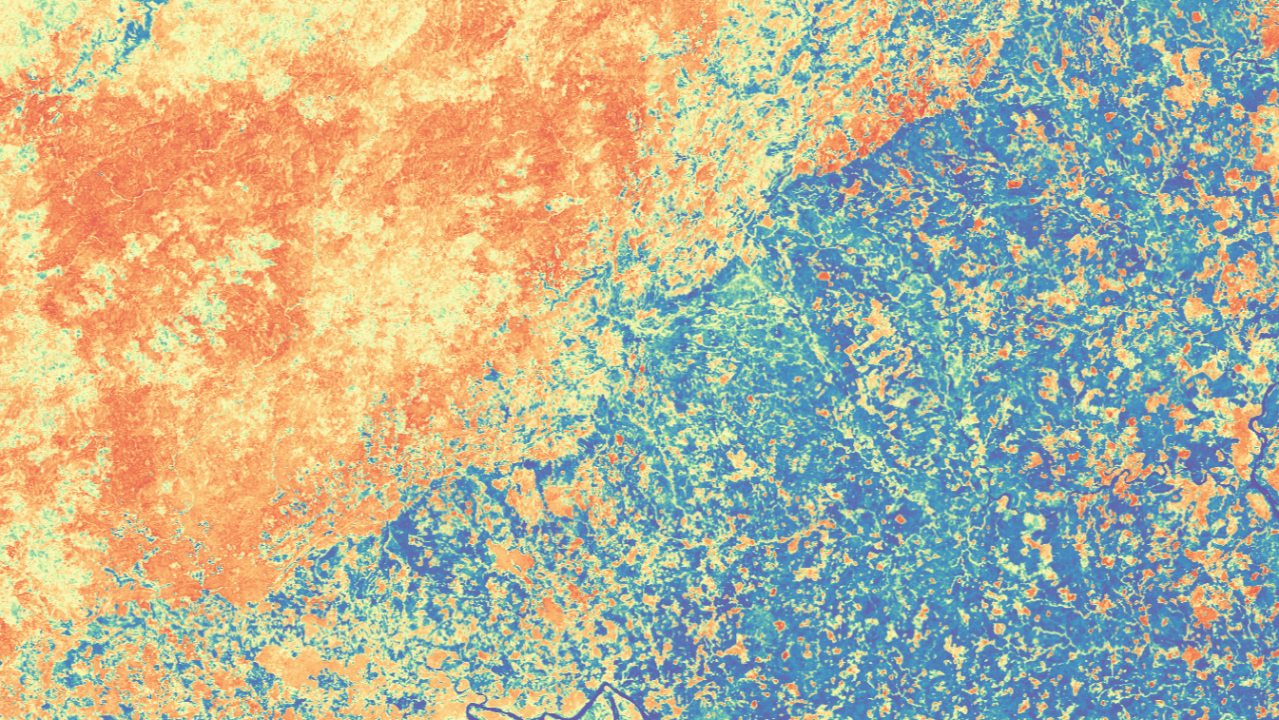

Remote sensing could be used to measure nature-based carbon solutions, but it faces challenges related to input data sources, the scarcity of ground-truth data, and model development. Innovative approaches such as generative AI and few-shot learning could help overcome these bottlenecks and unlock nature's potential in carbon markets.

Remote sensing imagery has the potential to play a critical role in expanding carbon markets and fostering trust. However, measuring the impact of nature-based solutions using this technology presents significant challenges.

The first complexity lies in the sources of input imagery provided to the models. The amount of available remote sensing imagery is vast and growing with advances in new space technologies, so choices must be made about which sources to use and how to combine them. The diversity and richness of this imagery call for models that jointly process time series of multispectral and Synthetic Aperture Radar (SAR) imagery. Through multi-temporality, such models would capture information related to seasons and phenology; through multi-modality, they would combine rich spectral information (from multispectral imagery) with structural information (from SAR imagery).
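As a minimal sketch of one simple way to combine the two modalities, the function below assumes that the optical and SAR time series have already been co-registered to the same grid and resampled to the same dates (in practice a non-trivial step). The name `fuse_time_series`, the band counts and the choice of temporal statistics are illustrative assumptions. This is a hand-crafted early-fusion baseline, not the learned fusion model the paragraph calls for.

```python
import numpy as np

def fuse_time_series(ms: np.ndarray, sar: np.ndarray) -> np.ndarray:
    """Hypothetical early fusion of co-registered optical and SAR time series.

    ms:  (T, C_ms, H, W) multispectral reflectance, NaN where cloud-masked
    sar: (T, C_sar, H, W) SAR backscatter in dB (e.g. VV and VH)
    Returns per-pixel features of shape (H, W, 3 * (C_ms + C_sar)).
    """
    if ms.shape[0] != sar.shape[0] or ms.shape[2:] != sar.shape[2:]:
        raise ValueError("inputs must share time steps and spatial grid")
    # Multi-modality: stack optical and radar channels at each date -> (T, C, H, W).
    stack = np.concatenate([ms, sar], axis=1)
    # Multi-temporality: per-channel statistics over time summarise seasonal behaviour.
    mean = np.nanmean(stack, axis=0)
    std = np.nanstd(stack, axis=0)
    amplitude = np.nanmax(stack, axis=0) - np.nanmin(stack, axis=0)
    feats = np.concatenate([mean, std, amplitude], axis=0)  # (3C, H, W)
    return np.moveaxis(feats, 0, -1)                        # (H, W, 3C)

# Usage on random placeholder data (not real imagery).
rng = np.random.default_rng(0)
T, H, W = 12, 8, 8                                   # e.g. monthly composites, 8x8 tile
ms = rng.uniform(0.0, 0.5, size=(T, 4, H, W))        # 4 optical bands
sar = rng.normal(-12.0, 3.0, size=(T, 2, H, W))      # 2 SAR polarisations
ms[3, :, 0, 0] = np.nan                              # one cloud-masked observation
features = fuse_time_series(ms, sar)
print(features.shape)  # (8, 8, 18)
```

Cloud-masked optical observations are represented as NaN and skipped by the `nan*` reductions. A learned model would more likely consume the full (time, channel) sequence rather than summary statistics.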

A second complexity relates to the ground-truth data bottleneck. The performance of AI models depends on the abundance and quality of the available ground-truth data. For forest indicators such as canopy height and tree height, large LiDAR surveys are becoming increasingly available on a per-country basis. The challenge in using these surveys lies in statistically extrapolating from a few training countries to potentially global coverage. For end-use forest indicators such as above-ground biomass or carbon, the difficulty is even starker, and there is often a trade-off between data quantity and quality. On the one hand, some datasets provided by ESA or NASA are extensive but are themselves derived only indirectly, by modelling from remote sensing data. On the other hand, smaller, localised datasets built from ground measurements are typically of higher quality but difficult to scale.
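One way to estimate how well a model extrapolates to countries it has never seen is leave-one-country-out cross-validation: train on all countries but one, evaluate on the held-out country, and repeat. The sketch below assumes numpy, uses closed-form ridge regression as a stand-in for the actual model, and generates hypothetical synthetic data in which each country has a canopy-height shift that the features do not explain.

```python
import numpy as np

def ridge_fit(X, y, alpha=1.0):
    """Closed-form ridge regression with an unpenalised intercept (via centring)."""
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc = X - x_mean
    w = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ (y - y_mean))
    return w, y_mean - x_mean @ w

def leave_one_country_out(X, y, countries, alpha=1.0):
    """RMSE on each country when the model is trained on all the other countries."""
    scores = {}
    for c in np.unique(countries):
        held_out = countries == c
        w, b = ridge_fit(X[~held_out], y[~held_out], alpha)
        pred = X[held_out] @ w + b
        scores[str(c)] = float(np.sqrt(np.mean((pred - y[held_out]) ** 2)))
    return scores

# Hypothetical data: 3 countries, 5 image-derived features, canopy height target.
rng = np.random.default_rng(0)
n = 300
X = rng.normal(size=(n, 5))
countries = np.repeat(np.array(["A", "B", "C"]), n // 3)
offset = {"A": 0.0, "B": 2.0, "C": -1.0}  # country-specific shift not visible in X
y = (X @ np.array([3.0, -1.0, 0.5, 0.0, 2.0])
     + np.array([offset[c] for c in countries])
     + rng.normal(0.0, 0.5, size=n))
scores = leave_one_country_out(X, y, countries)
print(scores)
```

A random train/test split would let every country appear in training and can therefore give an optimistic picture of performance in new countries. scikit-learn's `LeaveOneGroupOut` provides the same grouped split for use with its estimators.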

To overcome this bottleneck, new learning paradigms are needed. One highly promising line of exploration lies in generative AI approaches, which can reduce the dependence on ground-truth data.

Generative AI models typically undergo a two-step training process. They start by learning on very large datasets of input imagery without any associated ground-truth data. During this stage, often called self-supervised pre-training, the models extract meaningful patterns and representations from the input data itself. The models then move to a supervised learning phase, in which they are "fine-tuned" on smaller datasets that contain both input imagery and the corresponding ground-truth data.
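The two-step process can be illustrated with a deliberately tiny numpy sketch. Several assumptions stand in for the real thing: synthetic data replaces imagery, a linear autoencoder trained to reconstruct its input replaces a generative pre-training model (real systems would use, for example, masked autoencoders on image patches), and `y` is a hypothetical biomass-like target. Only 30 labelled samples are used for fine-tuning.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for imagery: 10 features driven by 3 hidden factors.
n_samples, n_features, n_latent = 2000, 10, 3
A = rng.normal(size=(n_latent, n_features)) / np.sqrt(n_features)
S = rng.normal(size=(n_samples, n_latent))
X = S @ A + 0.1 * rng.normal(size=(n_samples, n_features))
# Hypothetical ground truth (e.g. biomass); most of it stays hidden from the model.
y = S @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=n_samples)

# Step 1: self-supervised pre-training on inputs only (y is never used here).
W = 0.1 * rng.normal(size=(n_features, n_latent))  # encoder
V = 0.1 * rng.normal(size=(n_latent, n_features))  # decoder
pretrain_losses = []
for _ in range(1000):
    Z = X @ W
    R = Z @ V - X                                   # reconstruction residual
    pretrain_losses.append(float(np.mean(np.sum(R ** 2, axis=1))))
    G = 2 * R / n_samples                           # gradient w.r.t. reconstruction
    grad_V, grad_W = Z.T @ G, X.T @ (G @ V.T)
    V -= 0.05 * grad_V
    W -= 0.05 * grad_W

# Step 2: supervised fine-tuning of encoder + linear head on a small labelled subset.
n_labelled = 30
X_lab, y_lab = X[:n_labelled], y[:n_labelled]
h, b = np.zeros(n_latent), 0.0
for _ in range(500):
    Z = X_lab @ W
    r = 2 * (Z @ h + b - y_lab) / n_labelled        # gradient w.r.t. predictions
    grad_h, grad_b, grad_W = Z.T @ r, r.sum(), X_lab.T @ np.outer(r, h)
    h -= 0.05 * grad_h
    b -= 0.05 * grad_b
    W -= 0.001 * grad_W                             # smaller step for pre-trained weights

# Evaluate on samples whose labels were never used.
X_test, y_test = X[n_labelled:], y[n_labelled:]
test_mse = float(np.mean((X_test @ W @ h + b - y_test) ** 2))
print(f"reconstruction loss: {pretrain_losses[0]:.3f} -> {pretrain_losses[-1]:.3f}")
print(f"test MSE: {test_mse:.3f} (label variance: {np.var(y_test):.3f})")
```

The smaller learning rate on the encoder during fine-tuning is a common choice to keep pre-trained representations from being overwritten by a handful of labels.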

The fine-tuning step of generative AI training could be further divided into several sub-steps. For example, the model could first be fine-tuned on a large dataset with low-quality ground-truth data, then on a smaller dataset with high-quality ground-truth data. Taking the refinement even further, the model could then be fine-tuned on high-quality ground-truth data specific to the region or area of interest. This localised fine-tuning would be best achieved through few-shot learning, which is closely related to generative AI.
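The staged refinement can be sketched with a linear model trained by gradient descent, where each stage warm-starts from the weights of the previous one. Everything here is a hypothetical assumption: the "low-quality" labels are simulated as biased and noisy (as indirect model outputs might be), the local relationship differs slightly from the global one, and the few-shot stage is plain fine-tuning on 10 samples with a small step budget. Dedicated few-shot methods (e.g. meta-learning) are not shown.

```python
import numpy as np

rng = np.random.default_rng(1)
n_features = 5
w_global = np.array([1.0, 2.0, -1.0, 0.5, 0.0])                # hypothetical global relation
w_local = w_global + np.array([0.5, -0.5, 0.0, 0.0, 0.5])     # area of interest differs

def make_data(n, w, noise):
    """Synthetic features and labels following y = X @ w + noise."""
    X = rng.normal(size=(n, n_features))
    return X, X @ w + noise * rng.normal(size=n)

def fine_tune(w, X, y, lr, steps):
    """Gradient descent on mean squared error, warm-started from w."""
    w = w.copy()
    for _ in range(steps):
        w -= lr * 2 * X.T @ (X @ w - y) / len(y)
    return w

def mse(w, X, y):
    return float(np.mean((X @ w - y) ** 2))

X1, y1 = make_data(5000, 0.8 * w_global, noise=1.0)  # large, low-quality (biased, noisy)
X2, y2 = make_data(200, w_global, noise=0.1)         # smaller, high-quality
X3, y3 = make_data(10, w_local, noise=0.1)           # few-shot, local, high-quality
X_eval, y_eval = make_data(1000, w_local, noise=0.1) # held-out local evaluation set

local_mse = {}
w = fine_tune(np.zeros(n_features), X1, y1, lr=0.05, steps=300)
local_mse["after stage 1"] = mse(w, X_eval, y_eval)
w = fine_tune(w, X2, y2, lr=0.05, steps=300)
local_mse["after stage 2"] = mse(w, X_eval, y_eval)
w = fine_tune(w, X3, y3, lr=0.05, steps=100)          # few steps: stay near previous weights
local_mse["after stage 3"] = mse(w, X_eval, y_eval)
print(local_mse)
```

In this convex toy problem, warm-starting the first two stages mainly saves iterations, since gradient descent reaches the same optimum from any starting point; with deep networks the initialisation matters much more. The few-shot stage relies on its small step budget (a form of early stopping) to adapt to the local data without drifting far from the previously learned weights.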

Feel free to contact us to explore collaboration opportunities in this field.